- Word count: 901

- Average reading time: 4 minutes and 30 seconds (based on 200 WPM)

When

July 2016

What

An attempt to localize and extract the back numbers of cyclists in images. An implementation of the Cascade Classifer from OpenCV for localizing back numbers in under 6 hours. For example one of the images the classifier would be working with looks as follows:

The goal was to localize and read the back numbers from the images. As can be seen in the above picture this poses a tricky task as the characters are often contorted. Neverthelss I was hopeful and always up for a challenge - though I didn't expect to solve it I wanted to see how far I could come to attaining this goal.

Why

I had a nice talk with Ordina and asked them if they had any cases lying around that needed solving, not expecting that I would simply solve it in a days work but more an attempt to demonstrate how I'd tackle the problem given a day of work. I also was curious about what kind of cases they were working on and wanted to learn more about their SMART department.

How

Having done the [ANPR] project already I figured I could use some of the lessons learned from that and apply it here to generate a nice labelled training set and / or use it as a detection algorithm in itself. However, the algorithm didn't work well, if at all. It showed up a lot of false positives or didn't find anything. Having spend a couple of hours on this attempt I needed to decide how to proceed:

- Continue attempting

- Start labelling by hand

Thinking about how much time I spend on the ANPR project and how little time I had left at this point. I decided the most reliable way forward would be to go over to hand labelling. Having no images whatsoever I first needed to gather some images for training. After almost an hour of googling I gathered approx. 70 good images. This was very little to work with. As it turned out it is quite difficult to find enough cyclist images photographed from behind or the side. Despite the low amount of images, a lot of these images had multiple cyclists in the photograph.

Hand labelling

Having been made aware of the great label tool Sloth through the Whale recognition challenge I installed the program and got to work. I labelled both the backnumbers and and heads of cyclists to train two different models: cyclists always ride with a helmet and they all look roughly the same and not so much like any other object. This made for a good working identifier, or so I hoped. With the helmet detection model I aimed to reinforce the backnumber detection model.

After labelling the collected images I ended up with two tiny datasets I could use to train the two models with.

For this post I quickly wrote this function to illustrate how tiny the datasets actually are:

def cast_img_list_into_mosaic(img_list):

img_size = 32

square_size = int(math.ceil(len(img_list)**0.5))

mass_img = np.zeros((img_size*square_size + 1, img_size*square_size + 1, 3))

for i in range(square_size):

for j in range(square_size):

if i*square_size + j < len(img_list):

img = img_list[i*square_size + j]

resized_img = misc.imresize(img, (img_size, img_size, 3))

mass_img[i*img_size:(i+1)*img_size, j*img_size:(j+1)*img_size, :] = resized_img

else:

break

return mass_img

to cast them into one single image. These images illustrate what the datasets look like.

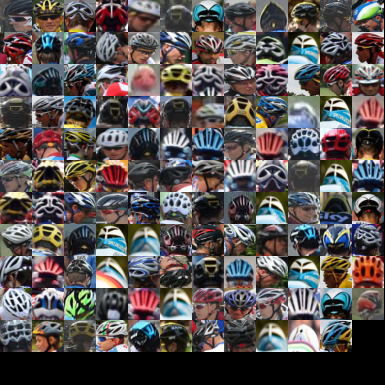

Head dataset:

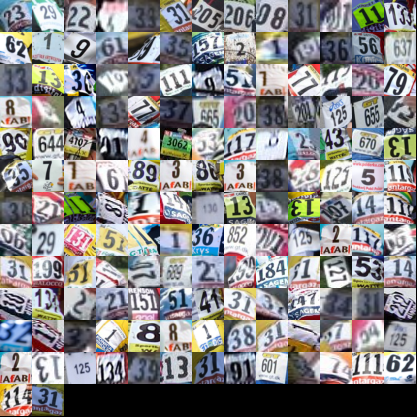

Backnumber dataset:

There are a lot of methods to make a dataset artificially larger whilest not losing accuracy. For example, skewing the image in both directions and rotating the image slightly multiple times. With these methods one could grow a dataset several times larger than it originally was - for the cost of overfitting. I decided not to do this at the time because I was running out of time and one of the functions I knew to rotate the images would introduce artifacts. Artifacts on images the size of 15x15 would be disastrous I thought.

Two seperate models

With such a small amount of training samples I didn't expect much from the models, a lot of false positives were bound to happen. The reason I trained two models instead of one is to compensate for the small size of the model. I figured that if a head is detected but no back number, than the model will look again with different parameters in a region under the head, under the assumption that a cyclist isn't upside down.

I turned on the model to be trained, which would last several hours, and proceded forward with an attempt to rotate the found images. With one hour left on the clock I spend this last hour on an attempt to figure out a way to find the angle needed to rotate the backnumbers with for a steady recognition. Though I did already found a way to extract the found backnumbers and that was by fitting a model on this housenumber dataset. In this model I would induce artifacts to mimic the contorted backnumbers in the hope of improving the recognition results. Having spen This is were this project ended. The next day I would be able to see how good (or bad) the models would perform.

The result of the models

The result is interesting for the amount of samples used for training. I did not have a lot of images to test the models on but the one thing that stood out is that both models almost always found the cyclists - littered with false positives - but cyclists nevertheless!

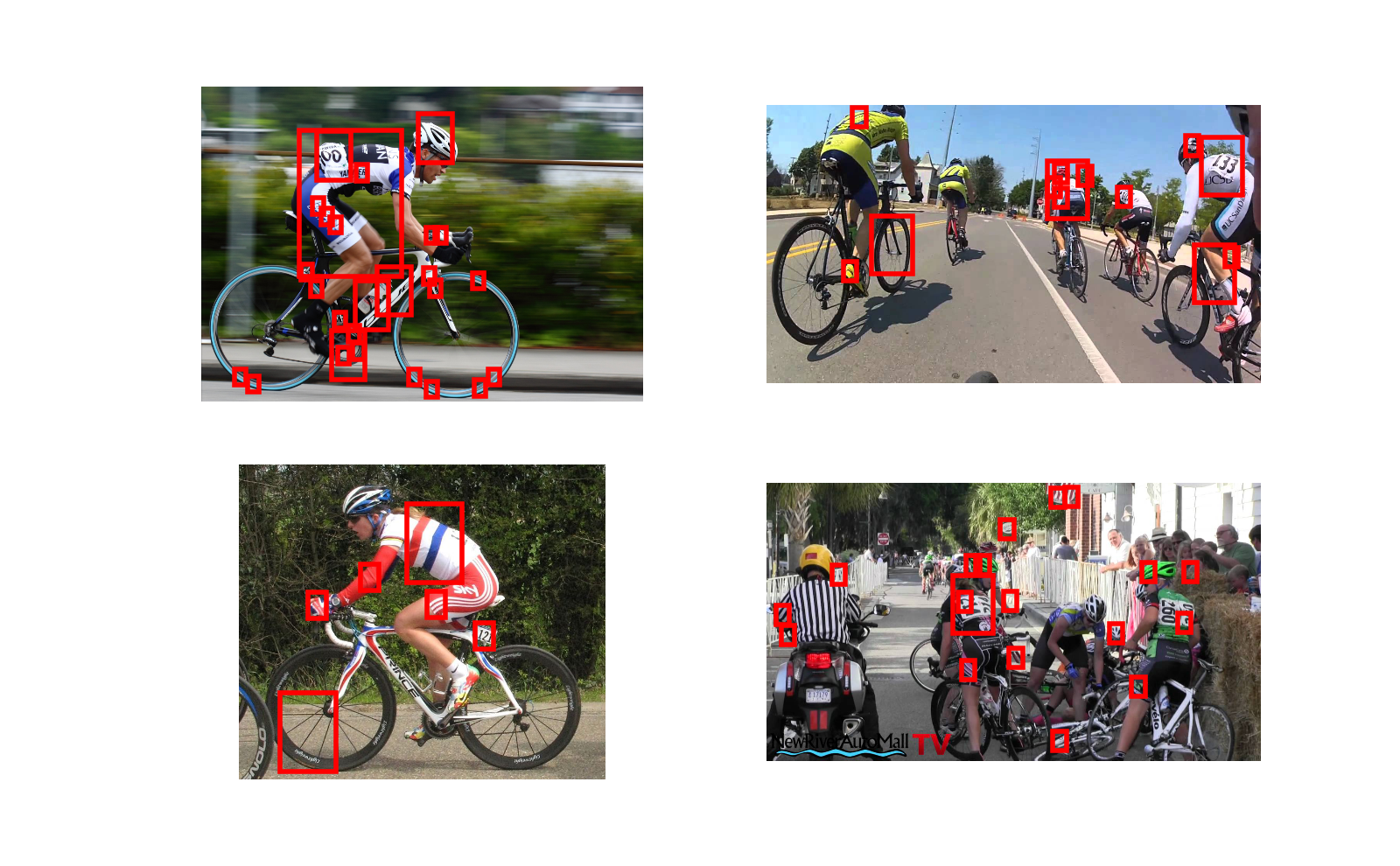

The backnumber model finds almost every backnumber though not always cut off nicely enough, see for yourself:

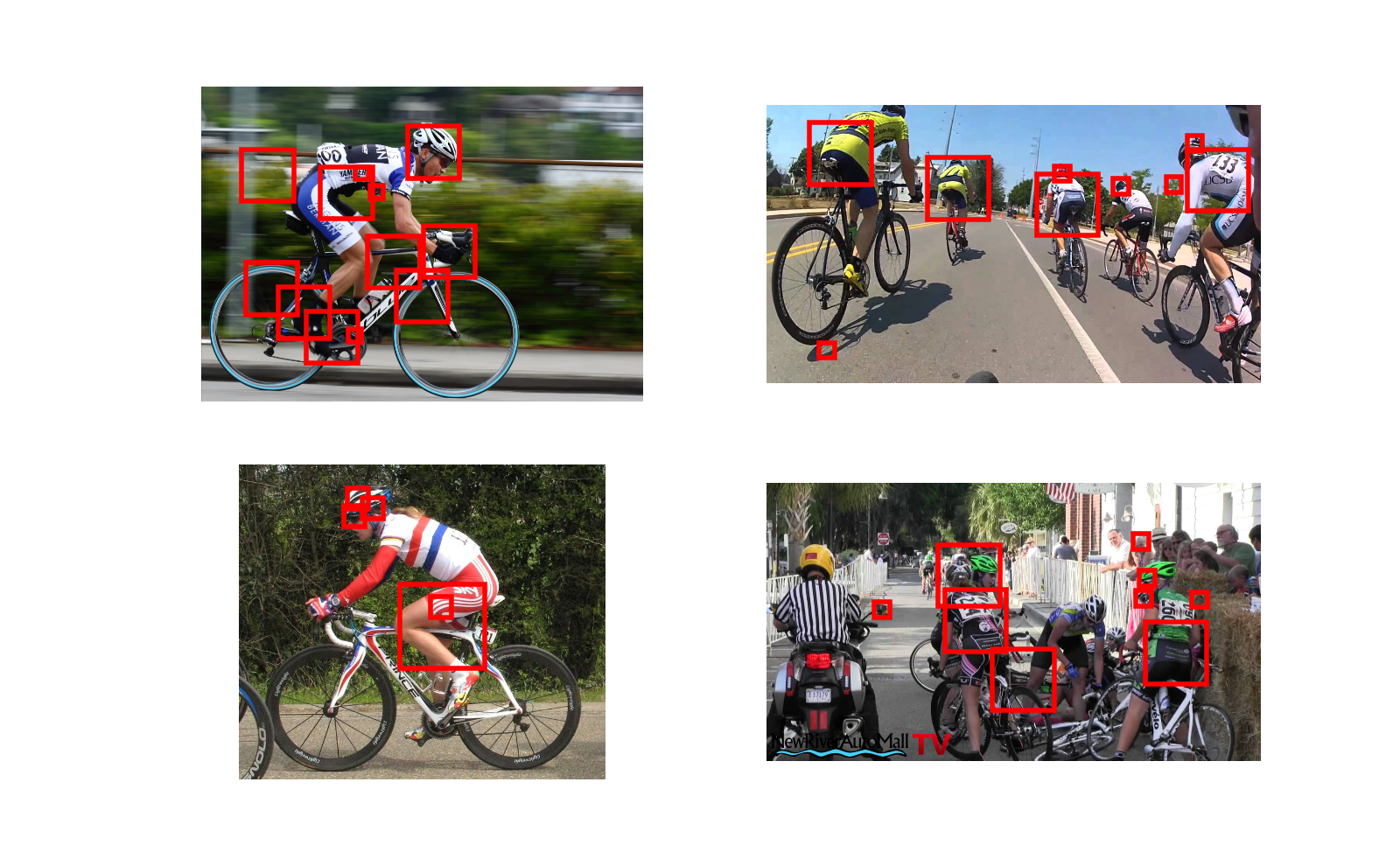

The head model

It was a fun experience.